The EFF presented their proposal how to improve the security of SSL/TLS and the internet PKI infrastructure. To understand their proposal, one needs to understand how PKI in the internet works today:

The EFF presented their proposal how to improve the security of SSL/TLS and the internet PKI infrastructure. To understand their proposal, one needs to understand how PKI in the internet works today:

Public Key Cryptography

In cryptography, you can usually encrypt and decrypt  data. In the past, encryption and decryption used the same key. Starting from the 70s, a new class of encryption/decryption algorithms was invented, the public key encryption algorithm. Instead of using the same key for en- and decryption, these algorithms use different keys for en- and decryption. During key generation, two keys are generated: A public key, that is used to encrypt data, and can be given out to everybody in the word, and a corresponding secret key, that must be kept hidden by the owner. Everybody who has access to the public key can encrypt data, but only the owner of the secret key is able to decrypt it.

There are many algorithms, for example RSA and ElGamal are the most famous public key encryption algorithms, while other algorithms like McEliece and Rabin are less well known.

Besides encryption, there are also digital signature algorithms. Again, a public and a private key is generated. The private key can be used to generate a digital signature on a document. The public key can then be used to verify the signature on the document. A signature on a document should guarantee that the document was really signed by the holder of the private key, and was not altered afterwards.

PKI

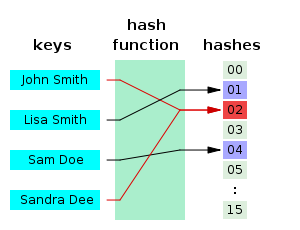

These ideas sound simple at the first look, but in practice, getting a public key of a person or company is not that easy. Just publishing your public key in some kind of web forum or on your facebook page is not enough. Everybody would be able to create a facebook page for another person, and then posting a fake public key on that page, or under that persons name on a web forum. So we need a way to establish a binding between a public key and a person or identity (a company name, a domain name or an email address). One solution would be to meet everybody in person who you want to communicate with, but it doesn’t scale well, and not everybody wants to fly to San Jose, California, just to get the public key for paypal.com.

For these job, Public Key Infrastructue (PKI) and X.509 Certificates have been invented. A Certification Authority (CA) is an organization, that verifies the identity of a person, and that this person is in possession of a private key. After this has been confirmed, the CA issues a X.509 certificate. That certificate contains the corresponding public key of that person, and it’s identity, and this information is signed using the CAs private key. Everybody who thinks that this CA does a good job in verifying the identity of persons, and is in possession of that CAs public key can verify that signature. As from now on, one only needs to trust a CA. One can simply give away the certificate issued by a CA, and everybody can get the public key from the certificate, and verify that it really belongs to that person, by verifying the signature of the CA. Today, there are hundreds of CAs active on the internet, and every web browser comes with a pre-installed list of trustworthy CAs and their public keys.

SSL/TLS

To encrypt HTTP traffic and to prove the autenticity of a website, the SSL/TLS protocol was created. When a session to a web server is established, the web server usually provides a digital certificate containing the public key of that web server. The web browser verifies the signature on that certificate, and that the identity in that certificate matches with the servers name it want’s to connect to. If everything is fine, the public key in that certificate is used to establish a secure session with that web server using some kind of key derivation scheme. (I won’t go into detail here)

The current state of PKI in the internet

At the first look, this sounds like a perfect solution. Whenever I want to talk privately with paypal, I just point my web browser to https://www.paypal.com/, it automatically connects to the server, gets a certificate, verifies that is has been correctly signed by a trustworthy CA, and the identidy in the certificate matches the expected servers hostname.

Attack vectors

However, there are multiple problems with that system. Just to mention one example: There are hundred of CAs active in the internet, and your web browser trusts every single one of them. Every CA is allowed to issue a certificate for every domain name in the internet. For example the national Chinese stat CA is allowed to issue a certificate for http://www.defense.gov/, which is the website of the ministry of defense of the united states of america. Also, the verification done by most CAs is minimal. For many CAs, it is sufficient if you can receive a mail for hostmaster@domain.tld, to get a certificate for domain.tld. There are multiple ways how you can attack this:

CAs

First of all, you may find a bug in the CAs website or email server, that allows you to get access to the certificate issuing software, bypassing these checks.

DNS

Also, you might be able to attack a DNS server serving the zone-file for domain.tld, that allows you to reroute mail for hostmaster@domain.tld on the DNS level. This allows you to get a certificate for domain.tld too.

Routers

Routers, especially those using BGB or a similar protocol might be tricked into rerouting the traffic for the mail server of domain.tld to your network. This way, you can intercept the mail and get your certificate too.

Crypto

Besides that, weak cryptography algorithms like MD5 have been used by some CAs, and this has been used to generate a rouge certificate too.

The EFF solution

To improve the security of PKI, the EFF has presented a proposal:Â Sovereign Keys

Sovereign Keys should make it harder for an attacker to generate a new certificate for an HTTPS website, without the cooperation of the legitimate site operator. The main building block of Sovereign Keys are so called timeline servers. These timeline servers are append-only databases, meaning that one can only add entries to the database, but never modify or delete them. These timeline servers could be operated by different entities like the EFF itself, or Mozilla, Google or Microsoft.

To use Sovereign Keys, the side administrator obtains an X.509 certificate as usual. Then he generates a new key, the so called sovereign key. He uploads the key with the certificate to a timeline server. The server operator checks, if that certificate is really issued by a valid CA and no other sovereign key has been added previously, and adds the sovereign key with the hostname of the certificate to the database.

When a client connects to the website, he also requests all database entries belonging to that hostname from a timeline server. In parallel to that, a SSL/TLS connection is established. The Server delivers the server certificate to the client, with an additional signature created with the sovereign key. The client can then check, if this signature can be verified with the sovereign key retrieved from the timeline server.

More details

The full protocol is a little bit more complex, because it needs to deal with revocation, privacy, mirroring and load balancing the timeline servers and many more things. It has not yet been finalized, but a draft of the protocol can be downloaded from:Â https://git.eff.org/?p=sovereign-keys.git;a=blob_plain;f=sovereign-key-design.txt;hb=master

Summary

For me, this looks like one of two solutions you need to improve the general security of SSL/TLS. Sovereign keys is a great solution for website operators that care about the security of their users. It will not help a user, if the website he connects to does not use it. For these cases, a different solution should be used, like checking if multiple computers in the internet get the same certificate from the server.