Julian Wälde (zeri) and Alexander Klink (alech) presented a very nice way to bring down many popular websites at 28C3. The idea is quite simple, effective and general. Many programming languages, especially scripting languages, frequently use hash tables to store all kinds of data.

Hash Tables

Hash tables (which are very well explained on Wikipedia) are data structures that store key-value pairs very efficiently. Adding new entries to the table, looking up entries for a given key, and deleting entries usually take $latex O(1)$ time in the best and average case, which means that the time for each operation is usually constant and doesn’t depend on the number of entries stored in the table. Other data structures, such as binary-tree-type structures, need $latex O(\log(n))$ operations in the average case, where n is the number of entries stored, so they need more time when a lot of entries are stored. However, there is a drawback: hash tables need $latex O(n)$ operations in the worst case, compared to $latex O(\log(n))$ operations for balanced binary trees, which means that they are much slower in very rare cases.

How the attack works

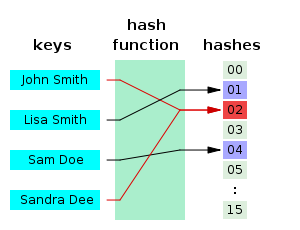

Many hash table implementations are very similar. They use a deterministic hash function to map a key k (usually a string or another complex data structure) to a single 32-bit or 64-bit value h. Let l be the size of the current table. Then h % l is used as the index into the table at which the entry is stored. If more than one entry is mapped to the same index, a linked list of entries is stored at that index. If many different keys are all mapped to the same hash value h, all of these entries are stored at the same index, and the hash table degrades to a simple linked list, where all operations need $latex O(n)$ time.
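To make the effect concrete, here is a minimal Python sketch of such a table. It assumes a djb2-style "times 33" hash and a fixed table size of 1024; the class `ChainedTable` and the helpers `colliding_keys` and `count_comparisons` are hypothetical names made up for this illustration and do not reproduce the implementation of any particular language. It relies on the fact that the two-character blocks "Ez" and "FY" contribute the same amount to a times-33 hash, so any strings of equal length built from them collide.

```python
from itertools import product


def djb2(key: str) -> int:
    """djb2-style "times 33" string hash, truncated to 32 bits."""
    h = 5381
    for ch in key:
        h = (h * 33 + ord(ch)) & 0xFFFFFFFF
    return h


class ChainedTable:
    """Fixed-size table with one chain per slot; counts key comparisons."""

    def __init__(self, size: int = 1024) -> None:
        self.size = size
        self.buckets = [[] for _ in range(size)]
        self.comparisons = 0

    def insert(self, key: str, value: object) -> None:
        bucket = self.buckets[djb2(key) % self.size]  # index = h % l
        for i, (existing, _) in enumerate(bucket):
            self.comparisons += 1
            if existing == key:
                bucket[i] = (key, value)  # overwrite an existing entry
                return
        bucket.append((key, value))  # new key: append to the chain


def colliding_keys(blocks: int) -> list:
    """All strings built from `blocks` copies of "Ez" or "FY".

    69*33 + 122 == 70*33 + 89, so all 2**blocks strings share one hash.
    """
    return ["".join(p) for p in product(("Ez", "FY"), repeat=blocks)]


def count_comparisons(keys: list) -> int:
    """Insert all keys into a fresh table and return the comparison count."""
    table = ChainedTable()
    for k in keys:
        table.insert(k, None)
    return table.comparisons


evil = colliding_keys(10)  # 1024 keys, all with the same hash value
benign = [f"param{i}" for i in range(len(evil))]

print(len({djb2(k) for k in evil}))  # prints 1: a single hash value
print(count_comparisons(evil), count_comparisons(benign))
```

With n colliding keys, the i-th insertion has to walk a chain of i-1 entries, so the total is n(n-1)/2 comparisons (523776 for n = 1024), while the benign keys need far fewer. This quadratic growth is what turns a modest request into minutes of CPU time.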

Performance

To give an impression of how much CPU time it takes to process such a request on a Core i7 CPU with PHP, assuming that the processing time for a request is not limited (usually, PHP limits the processing time for a request to 1 minute):

- 8 MB of POST data – 288 minutes of CPU time

- 500k of POST data – 1 minute of CPU time

- 300k of POST data – 30 sec of CPU time

So you can keep about 10,000 Core i7 CPU cores busy processing PHP requests using a gigabit internet connection. Alternatively, a single gigabit connection can keep busy 30,000 Core2 CPU cores for ASP.NET, 100,000 Core i7 CPU cores for Java Tomcat, or 1,000,000 Core i7 CPU cores for CRuby 1.8.

Even though this blog is named cryptanalysis.eu, the hash functions used for these tables are not cryptographic hash functions like SHA256. Instead, simple hash functions like djb2 are used, and it is very easy to find many inputs mapping to the same output. Julian and Alexander did a great job checking the hash table implementations and hash functions of many programming languages used for web applications. For all of the languages they checked, they managed to find a lot of keys mapping to the same output, except for Perl. Perl uses a randomized hash function, i.e. the hash doesn’t only depend on the key, but also on an additional value that is chosen at random at startup of the application. All the other languages also store the query parameters sent in an HTTP GET or POST request in a hash table, so a request with many query parameters that all map to the same hash value will slowly fill such a hash table before the first line of code written by the application programmer is executed. Filling this hash table will usually take several minutes on a decent CPU, so even a fast web server can be kept busy using a slow connection.
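The idea of a randomized hash function can be sketched in a few lines of Python. This is only an illustration under assumptions, not Perl's actual hash function: it uses keyed BLAKE2b from the standard library as a stand-in, and the names `_PROCESS_KEY` and `randomized_hash` are hypothetical. A language runtime would use something much faster, but the principle is the same: without knowing the secret value, an attacker cannot precompute keys that land in the same slot.

```python
import hashlib
import os
from itertools import product

# Hypothetical per-process secret, chosen at random once at startup.
_PROCESS_KEY = os.urandom(16)


def randomized_hash(key: str, secret: bytes = _PROCESS_KEY) -> int:
    """64-bit keyed hash: the result depends on `secret` as well as `key`."""
    digest = hashlib.blake2b(
        key.encode("utf-8"), key=secret, digest_size=8
    ).digest()
    return int.from_bytes(digest, "little")


# 1024 keys that all collide under a plain times-33 hash,
# because the blocks "Ez" and "FY" are interchangeable there.
keys = ["".join(p) for p in product(("Ez", "FY"), repeat=10)]

table_size = 1024
slots = {randomized_hash(k) % table_size for k in keys}
print(len(slots))  # number of distinct slots used; varies from run to run
```

Note that simply randomizing the starting value of a times-33 hash would not be enough, because "Ez" and "FY" collide for every starting value. The secret has to influence the hash in a way that an attacker cannot cancel out.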

Countermeasures

One may ask whether this problem can be fixed by using a modern hash function like SHA256. Unfortunately, this will not solve the problem, because hash tables are usually very small compared to the output space of such a function, with at most about $latex 2^{32}$ entries, and one can still find a lot of values m where SHA256(m) maps to the same value modulo $latex 2^{32}$. Fixing this only in the parser for the parameter string also seems to be a bad solution, because many programmers use hash tables to store data retrieved from a user or another insecure source. From my point of view (which is also the opinion of the speakers), the best solution is to use randomized hash functions for hash tables. However, several kinds of fixes have been deployed by the vendors:

Limiting HTTP POST and GET request lengths (Microsoft ASP.NET)

Microsoft suggests limiting the HTTP request length. Most applications don’t require very long HTTP requests, except for file uploads.

<configuration> <system.web> <httpRuntime maxRequestLength="200"/> </system.web> </configuration>

As long as you don’t put data from sources other than the HTTP request (like external URLs) into a hash table, this should prevent the basic form of the attack.

Microsoft has also released an emergency patch against this attack: http://technet.microsoft.com/en-us/security/bulletin/ms11-100.mspx

Limiting the number of different HTTP request parameters (PHP, Tomcat)

PHP has added a new configuration variable, max_input_vars, that limits the number of parameters. This is similar to the first solution, except that it limits the number of different parameters that can be submitted in a single request rather than the total length of the request. This can be easier to use than limiting the length of the HTTP request, because application programmers usually know how many parameters they need, while the maximum length of the request is harder to predict. However, if a single request parameter contains a data structure that is later parsed and put into a hash table, the application might still be vulnerable.

Apache Tomcat also deployed a similar fix that limits the number of parameters to 10000 by default.
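The same kind of limit can be sketched in Python, whose standard library function `urllib.parse.parse_qsl` accepts a `max_num_fields` argument. The wrapper `parse_limited` and the constant `MAX_PARAMS` are hypothetical names for this illustration, and the value 1000 is an arbitrary assumption, not a recommendation.

```python
from urllib.parse import parse_qsl

MAX_PARAMS = 1000  # hypothetical limit; pick what the application needs


def parse_limited(query: str, limit: int = MAX_PARAMS) -> dict:
    """Parse a query string, rejecting it if it has more than `limit` fields."""
    # parse_qsl raises ValueError when the field count exceeds
    # max_num_fields, so no oversized request ever reaches the dict.
    pairs = parse_qsl(query, keep_blank_values=True, max_num_fields=limit)
    return dict(pairs)


print(parse_limited("a=1&b=2"))

try:
    parse_limited("&".join(f"k{i}=v" for i in range(5000)))
except ValueError as exc:
    print("rejected:", exc)
```

As with max_input_vars, this only bounds the number of top-level parameters. A single parameter whose value is later parsed into another hash table is not covered by the check.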

Using different data structures

As mentioned before, other data structures (binary-tree-type structures like an AVL tree) guarantee that all operations are always executed in $latex O(\log(n))$ and never need $latex O(n)$ time. This is what Daniel J. Bernstein, for example, suggests. This completely fixes the vulnerability, but requires fundamental changes to the runtime/compiler/interpreter, and might be harder to implement.

More Information

More information can be found on Twitter, using the hashtag #hashDoS or from the user @hashDoS!

oCERT has a good summary about affected and fixed software versions: http://www.ocert.org/advisories/ocert-2011-003.html

Video and Paper

The video of the talk is available on YouTube. There is an advisory on full disclosure that describes the attack in detail: http://permalink.gmane.org/gmane.comp.security.full-disclosure/83694